Titanic Kaggle Competition

Kaggle is a great service that hosts data science competitions. The Titanic challenge involves predicting which passengers survived based on redacted data. My final public score was 81.339% accuracy, which placed in the top 4% globally. Access the repo here.

Overview

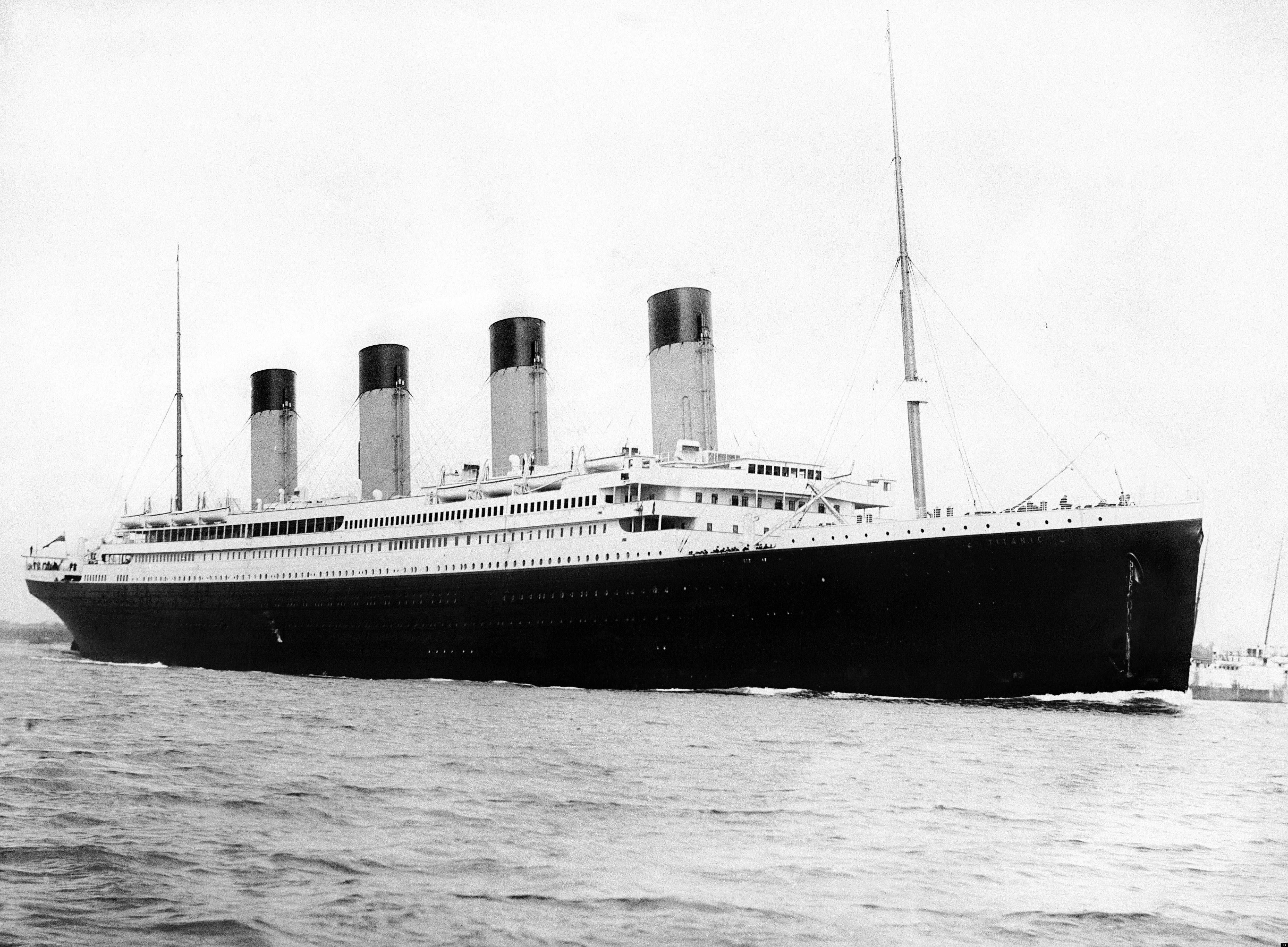

I shall consider the tragic history of the Titanic to be assumed knowledge. In this context the relevant information is that there were 2224 people on board when it sank. We have fairly complete data about who each of those people was and, significantly, which of them survived.

For the challenge, the body of passengers is broken into 3 groups. The challenger is given all the data for the first group (the training set) and all the data for the second group (the test set) except whether or not each passenger survived.

The challenge is to predict whether each passenger in the second (test) set survived based on the other data we have about them.

The data given for each passenger was:

Abridged Method

Brief: While it would be possible to make predictions by any process, this challenge is geared towards machine learning, not psychic intuition, so let's go with that. Machine learning is a prominent field of data science which involves constructing a model, training it on one data set (the training set) and using it to predict properties of another (the test set). Because I had never worked with the R programming language, which is designed for data science projects such as this, I decided to learn and use it here.

Feature Engineering: An optional but often critical step. The model will not know that class 1 is more privileged than class 3, but one can safely presume that, if it's relevant, the model will see that through simple correlation. Conversely, there is relevant information in the name that a model would be oblivious to without some feature engineering.

(i) As an example, a model would see no connection between two passengers named "Smith, Mr. John" and "Smith, Mrs. Jane", since the full alphanumeric strings don't match. To that end it makes sense to break the name field up into 'name[0]' (surname) and 'name[1]' (title). It would be interesting to investigate whether given names had any observable impact, but on a sample this size it seems unlikely.
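The original work was done in R; as a minimal illustration in Python (not the code from the repo), the split could look like the sketch below. It assumes every name follows the "Surname, Title. Given names" pattern from the example above, and `split_name` is a hypothetical helper name.

```python
def split_name(full_name):
    """Split 'Surname, Title. Given names' into (surname, title, given)."""
    # Everything before the first comma is the surname.
    surname, _, rest = full_name.partition(",")
    # Everything up to the first full stop after that is the title.
    title, _, given = rest.strip().partition(".")
    return surname.strip(), title.strip(), given.strip()


print(split_name("Smith, Mr. John"))   # ('Smith', 'Mr', 'John')
print(split_name("Smith, Mrs. Jane"))  # ('Smith', 'Mrs', 'Jane')
```

Once the surname is its own field, the two Smiths share a value that a model can pick up on.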

(ii) Another example of sensible feature engineering would be to analyze the titles. The model wouldn't know that 'Mlle' is the French analogue of the English 'Miss', so we should conflate the two. Similarly, there is one 'Capt' and two 'Major's, not enough to show a correlation in this data set, so we should conflate those with any other senior military title we find.
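A lookup table is one simple way to express this kind of conflation. The Python sketch below is only an illustration (the original work used R); the group label "Officer" and the helper name `normalise_title` are assumptions made for the example, and only the titles mentioned above are mapped.

```python
# Map rare or foreign titles onto a broader group.
# "Officer" is a made-up label for the senior military group.
TITLE_GROUPS = {
    "Mlle": "Miss",
    "Capt": "Officer",
    "Major": "Officer",
}


def normalise_title(title):
    """Return the grouped title, or the title unchanged if it has no group."""
    return TITLE_GROUPS.get(title, title)


for raw in ["Mlle", "Capt", "Major", "Mr"]:
    print(raw, "->", normalise_title(raw))
```

Any further titles found in the data would be added to the table in the same way.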

(iii) Lastly, and most intricately, it is possible to reconstruct families (the 'how many spouses / siblings' and 'how many children / parents' variables suddenly make sense). Someone traveling alone can be treated as a single. Conversely, there are 8 passengers with the name 'Goodwin', each of whom is traveling with 7 family members, so it's a safe bet that they were all members of the same family.
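One plausible way to turn this into a feature is to combine the surname with the family size. The Python sketch below is an assumption about how that could be done, not the method from the repo; `family_id` and its parameter names are hypothetical, and the 5 + 2 split of the Goodwin counts is illustrative only.

```python
def family_id(surname, siblings_spouses, parents_children):
    """Build a family label from the surname and the two relative counts."""
    # Family size is the passenger plus everyone they travel with.
    size = siblings_spouses + parents_children + 1
    if size == 1:
        return "Single"
    return f"{surname}_{size}"


# A passenger traveling with 7 relatives lands in an 8-person family.
print(family_id("Goodwin", 5, 2))  # Goodwin_8
print(family_id("Smith", 0, 0))    # Single
```

Passengers who share both a surname and a family size end up with the same label, which is what lets a model treat them as one group.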

Imputation: Depending on the model we use, missing data might (or might not) be problematic. Regardless of whether the algorithm in question can tolerate missing values, certain values are easy to infer. For instance, we don't have the fare for passenger 1044, but we know they traveled 3rd class from Southampton. If you graph the fares paid by other passengers traveling 3rd class from Southampton, you see a massive, narrow spike at 8.05, so it's reasonable to use that for their fare. Other fields, like cabin, were mostly empty, so it's fair to discount them as being more trouble than they're worth.
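The "spike" in the graph is the most common value, so this kind of imputation can be sketched as taking the mode among comparable passengers. The Python below is an illustration only (the original work used R): the field names `pclass`, `embarked` and `fare` are assumed, and the rows are made-up toy data, not the real data set.

```python
from statistics import mode

# Toy rows, invented for this example. "S" stands for Southampton.
passengers = [
    {"pclass": 3, "embarked": "S", "fare": 8.05},
    {"pclass": 3, "embarked": "S", "fare": 8.05},
    {"pclass": 3, "embarked": "S", "fare": 7.25},
    {"pclass": 1, "embarked": "S", "fare": 52.0},
    {"pclass": 3, "embarked": "S", "fare": None},  # fare missing
]


def impute_fare(rows):
    """Fill each missing fare with the most common fare among passengers
    of the same class and port. Returns new rows; the input is untouched."""
    known = [r for r in rows if r["fare"] is not None]
    filled = []
    for row in rows:
        row = dict(row)  # copy so the caller's data is not modified
        if row["fare"] is None:
            peers = [r["fare"] for r in known
                     if r["pclass"] == row["pclass"]
                     and r["embarked"] == row["embarked"]]
            if peers:  # leave the gap if there is nobody to compare with
                row["fare"] = mode(peers)
        filled.append(row)
    return filled


filled = impute_fare(passengers)
print(filled[-1]["fare"])  # 8.05
```

Using the mode rather than the mean keeps the imputed value on the spike instead of being pulled upwards by a few expensive tickets.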

Decision Tree (singular): Decision trees work in much the same way as a human mind might. For each of the fields they measure the correlation between that field and the field we wish to predict, then pick the strongest. For the engineered training set the strongest field is gender, with a correlation of 54.34%, compared to a correlation of 0.50% between survival and passenger ID.
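The text does not say which correlation measure was used. Assuming a plain Pearson coefficient on numerically encoded fields, the comparison could be sketched in Python as follows (an illustration, not the original R code). The six rows are made-up toy data, so the resulting numbers are unrelated to the figures quoted above.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Toy data: passenger ID, gender encoded as 1 = female, and the outcome.
passenger_id = [1, 2, 3, 4, 5, 6]
is_female = [1, 0, 0, 1, 1, 0]
survived = [1, 0, 0, 1, 0, 1]

print(pearson(is_female, survived))     # roughly 0.33
print(pearson(passenger_id, survived))  # roughly 0.10
```

Even on this tiny sample, gender tracks the outcome more closely than an arbitrary identifier does.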

To apply that single decision tree to the test set would be to predict that all of the females survived and none of the males did. Uploading that set of predictions receives an accuracy score of 76.55%. That is an interestingly high score, but consistent with "Women and Children" being saved first. Apparently it wasn't just a line. Applying a first-order decision tree to the feature-engineered data sets replaces gender with title as the best indicator of survival and scores 80.38%. That makes sense, since most titles are gender-specific but the title includes information about privilege as well.
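A one-level tree like this boils down to a lookup: for each value of the chosen field, predict whatever outcome was most common in the training set. The Python sketch below illustrates that idea under two assumptions: it uses a majority vote per value in place of a correlation score, and it runs on made-up toy rows. It is not the original R code, and `fit_stump` and `accuracy` are hypothetical helper names.

```python
from collections import Counter, defaultdict


def fit_stump(rows, field, target="survived"):
    """Learn the majority outcome for each value of `field`."""
    votes = defaultdict(Counter)
    for row in rows:
        votes[row[field]][row[target]] += 1
    return {value: counts.most_common(1)[0][0]
            for value, counts in votes.items()}


def accuracy(rows, field, rule, target="survived"):
    """Fraction of rows whose outcome matches the rule's prediction."""
    hits = sum(rule[row[field]] == row[target] for row in rows)
    return hits / len(rows)


# Toy training rows, invented for this example.
train = [
    {"sex": "female", "survived": 1},
    {"sex": "female", "survived": 1},
    {"sex": "female", "survived": 0},
    {"sex": "male", "survived": 0},
    {"sex": "male", "survived": 0},
    {"sex": "male", "survived": 1},
]

rule = fit_stump(train, "sex")
print(rule)                          # {'female': 1, 'male': 0}
print(accuracy(train, "sex", rule))  # 4 of 6 rows correct
```

Swapping `"sex"` for a title field would give the title-based version of the same rule.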

Decision Trees (plural): To improve on the gender submission one could apply the decision tree recursively, that is to say, rather than splitting only on the field with the highest correlation, splitting on each in turn. For the female subset the greatest correlation might be with age, and for the male subset it might be the class of their ticket. Beyond that one would calculate the correlations for the first class male subset and find... Applying this algorithm by hand would take an impractical amount of time, but that is where digital parallel processing power comes into its own.
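The recursion can be sketched in a few lines of Python. This is a simplified illustration, not the original R code: it scores each candidate field by how many training rows a majority vote per value would get right (an assumption standing in for the correlation measure), handles only categorical fields, and runs on made-up toy rows. All function names are hypothetical.

```python
from collections import Counter


def majority(rows, target):
    """Most common outcome among the rows."""
    return Counter(r[target] for r in rows).most_common(1)[0][0]


def group_by(rows, field):
    groups = {}
    for r in rows:
        groups.setdefault(r[field], []).append(r)
    return groups


def split_accuracy(rows, field, target):
    """Training accuracy of predicting the majority outcome per value."""
    hits = sum(Counter(r[target] for r in g).most_common(1)[0][1]
               for g in group_by(rows, field).values())
    return hits / len(rows)


def build_tree(rows, fields, target="survived"):
    # Stop when the subset is pure or there is nothing left to split on.
    if len({r[target] for r in rows}) == 1 or not fields:
        return majority(rows, target)
    best = max(fields, key=lambda f: split_accuracy(rows, f, target))
    remaining = [f for f in fields if f != best]
    return {
        "field": best,
        "default": majority(rows, target),  # used for unseen values
        "branches": {value: build_tree(group, remaining, target)
                     for value, group in group_by(rows, best).items()},
    }


def predict(tree, row):
    # Walk down until a leaf (a plain outcome) is reached.
    while isinstance(tree, dict):
        tree = tree["branches"].get(row[tree["field"]], tree["default"])
    return tree


# Toy training rows, invented for this example.
train = [
    {"sex": "female", "pclass": 1, "survived": 1},
    {"sex": "female", "pclass": 1, "survived": 1},
    {"sex": "female", "pclass": 3, "survived": 0},
    {"sex": "male", "pclass": 1, "survived": 0},
    {"sex": "male", "pclass": 3, "survived": 0},
    {"sex": "male", "pclass": 1, "survived": 0},
]

tree = build_tree(train, ["sex", "pclass"])
print(tree["field"])                                  # sex
print(predict(tree, {"sex": "female", "pclass": 3}))  # 0
```

On this toy data the tree splits on gender first and then on ticket class within the female subset, while the male subset needs no further split.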

Random Forests: While decision trees are great, they are liable to 'over-fitting', i.e. forming correlations that are only real within the training data set. To solve this problem one can use a random forest. Random forests measure correlations across randomly selected subsets, which simulates the conditions of making predictions outside the training set and reduces the noise of local coincidences.
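To make the idea of "randomly selected subsets" concrete, here is a deliberately simplified Python sketch, not the original R code. Each tree is trained on a random resample of the rows and on one randomly chosen field, and the forest predicts by majority vote. Real random forest implementations grow deeper trees and pick a random subset of fields at every split; the one-level trees, the helper names and the toy rows here are all assumptions made to keep the example short.

```python
import random
from collections import Counter


def fit_stump(rows, field, target):
    """One-level tree: majority outcome for each value of `field`."""
    votes = {}
    for r in rows:
        votes.setdefault(r[field], Counter())[r[target]] += 1
    default = Counter(r[target] for r in rows).most_common(1)[0][0]
    rule = {value: c.most_common(1)[0][0] for value, c in votes.items()}
    return field, rule, default


def fit_forest(rows, fields, target="survived", n_trees=25, seed=0):
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]  # draw rows with replacement
        field = rng.choice(fields)                 # random field for this tree
        forest.append(fit_stump(sample, field, target))
    return forest


def predict(forest, row):
    """Each tree votes; the most common vote wins."""
    votes = Counter(rule.get(row[field], default)
                    for field, rule, default in forest)
    return votes.most_common(1)[0][0]


# Toy training rows, invented for this example.
train = [
    {"sex": "female", "pclass": 1, "survived": 1},
    {"sex": "female", "pclass": 3, "survived": 1},
    {"sex": "female", "pclass": 3, "survived": 0},
    {"sex": "male", "pclass": 1, "survived": 1},
    {"sex": "male", "pclass": 3, "survived": 0},
    {"sex": "male", "pclass": 3, "survived": 0},
]

forest = fit_forest(train, ["sex", "pclass"])
print(predict(forest, {"sex": "female", "pclass": 1}))
```

Because every tree sees a slightly different sample, a coincidence that appears in only a few rows influences only a few votes.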

Putting all of these together gives us an accuracy score of 81.34%.